In a previous post (now several months ago — sorry!) I wrote about visualization strategies for looking at procedurally generated text from The Mary Jane of Tomorrow and determining whether the procedural generation was being used to best effect.

There, I proposed looking at salience (how many aspects of the world model are reflected by this text?), variety (how many options are there to fill this particular slot?) and distribution of varying sections (which parts of a sentence are being looked up elsewhere?)

It’s probably worth having a look back at that post for context if you’re interested in this one, because there’s quite a lot of explanation there which I won’t try to duplicate here. But I’ve taken many of the examples from that post and run them through a Processing script that does the following things:

Underline text that has been expanded from a grammar token.

Underlining is not the prettiest thing, but the intent here is to expose the template- structure of the text. The phrase “diced spam pie” is the result of expanding four layers of grammar tokens; in the last iteration, the Diced Spam is generated by one token that generates meat types, and the Pie by another token that generates types of dish.

structure of the text. The phrase “diced spam pie” is the result of expanding four layers of grammar tokens; in the last iteration, the Diced Spam is generated by one token that generates meat types, and the Pie by another token that generates types of dish.

This method also draws attention to cases where the chunks of composition are too large or are inconsistent in size, as in the case of the generated limericks for this game:

Though various factors (limerick topic, chosen rhyme scheme) have to be considered in selecting each line, the lines themselves don’t have room for a great deal of variation, and are likely to seem conspicuously same-y after a fairly short period of time. The first line of text locks in the choice of surrounding rhyme, which is part of why the later lines have to operate within a much smaller possibility space.

Increase the font size of text if it is more salient. Here the words “canard” and “braised” appear only because we’re matching a number of different tags in the world model: the character is able to cook, and she’s acquainted with French. By contrast, the phrase “this week” is randomized and does not depend on any world model features in order to appear, so even though there are some variants that could have been slotted in, the particular choice of text is not especially a better fit than another other piece of text.

Increase the font size of text if it is more salient. Here the words “canard” and “braised” appear only because we’re matching a number of different tags in the world model: the character is able to cook, and she’s acquainted with French. By contrast, the phrase “this week” is randomized and does not depend on any world model features in order to appear, so even though there are some variants that could have been slotted in, the particular choice of text is not especially a better fit than another other piece of text.

This particular example came out pretty ugly and looks like bad web-ad text even if you don’t read the actual content. I think that’s not coincidental.

Color the font to reflect how much variation was possible. Specifically, what this does is increase the red component of a piece of text to maximum; then the green component; and then the blue component. The input is the log of the number of possible variant texts that were available to be slotted into that position.

Color the font to reflect how much variation was possible. Specifically, what this does is increase the red component of a piece of text to maximum; then the green component; and then the blue component. The input is the log of the number of possible variant texts that were available to be slotted into that position.

This was the trickiest rule to get to where I wanted it. I wanted to suggest that both very high-variance and very low-variance phrases were less juicy than phrases with a moderate number of plausible substitutions. That meant picking a scheme in which low-variance phrases would be very dark red or black; the desirable medium-variance phrases are brighter red or orange; and high-variance phrases turn grey or white.

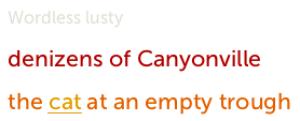

Here “wordless” and “lusty” are adjectives chosen randomly from a huge adjective list, with no tags connecting them to the model world. As a result, even though there are a lot of possibilities, they’re likely not to resonate much with the reader; they’ll feel obviously just random after a little while. (In the same way, in the Braised Butterflied Canard example above, the word “seraphic” is highly randomized.)

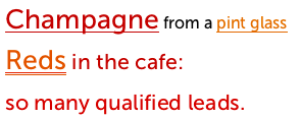

Finally, here’s the visualization result I got for the piece of generated text I liked best in that initial analysis:

We see that this text is more uniform in size and color than most of the others, that the whole thing has a fair degree of salience, and that special substitution words occur about as often as stressed words might in a poem.

*

There’s another evaluative criterion we don’t get from this strategy, namely the ability to visualize the whole expansion space implicit in a single grammar token.

By this I don’t mean the sort of generic chart you get if you look up visualize context free grammar on Google — those are generally aimed at understanding the structural space for the purposes of parsing rather than generation, and in particular aren’t designed to help the user visualize the richness of the space they’re creating.

But having some such strategy is important because (as I found working on both Annals of the Parrigues and The Mary Jane of Tomorrow) it’s easy to get bogged down expanding the text space in ways that don’t help very visibly or even that are actively counterproductive in terms of the reader/player’s experience of the generated text.

For instance, let’s go back to the following table from Mary Jane, which describes the ways that the tag [fruit]” might be expanded:

Table of Dubious Fruits

tag-list description

{ { } } “[one of]Lime[or]Orange[or]Banana[or]Pear[or]Lemon[or]Pineapple[or]Cranberry[or]Apple[at random]”

{ { cowgirl, pos } } “Prickly Pear”

{ { french, pos } } “[one of]Fraise[or]Framboise[at random]”

{ { innuendo, pos } } “[one of]Mango[or]Passionfruit[at random]”

{ { botany, pos } } “[Table of Cultivars type] Apple”

This table means that, if no world model features apply, the player could receive any of the eight random fruits listed on the first line. If they’re training a cowgirl, “Prickly Pear” comes into play as an option; if French, “Fraise/Framboise” becomes available, and so on. [Table of Cultivars] actually calls out to a whole other massive expansion table, containing hundreds of apple species names, which I unapologetically yanked from Darius Kazemi’s corpora set. In theory, the presence of that table massively expands the number of conceivable fruit descriptions in the game, but in practice, it’s far enough down the chain of grammar, and contingent enough on other world model features, that it is unlikely to be consulted very often. Putting it there was fun, and I enjoyed having really specific species names available for the botanical version of the robot, but if my aim had strictly been to increase the experienced richness and variety of the text, that addition would’ve been a big waste of time.

There’s something even worse one could do, though, which is to start with this:

Table of Dubious Fruits

tag-list description

{ { } } “[one of]Lime[or]Orange[or]Banana[or]Pear[or]Lemon[or]Pineapple[or]Cranberry[or]Apple[at random]”

where the player has a 1 in 8 chance of randomly getting each fruit, and expand it to this:

Table of Dubious Fruits

tag-list description

{ { } } “[one of]Lime[or]Orange[or]Banana[or]Pear[or]Lemon[or]Pineapple[or]Cranberry[or]Apple[at random]”

{ { } } “Prickly Pear”

…where the player now has a 1/16 chance of getting any of the first fruits, and a 1/2 chance of getting Prickly Pear, with the result that, though I just added content, the player is much likelier to see repetitions, and it will feel as though the content space is actually smaller.

This is a deliberately simple example in order to illustrate the point, but the same problem can arise in sneakier ways, if we add a grammar token with only a few possible expansions alongside another token with many possible expansions.

All kinds of other implementation complications can come into play here: some procedural grammar systems also use percentages to control the rate at which particular elements are used, for instance. So one possible strategy would be to make the system self-balancing — able to assess how many possible expansions there were down each grammatical route and then set its own probabilities accordingly, so that it would select the 8-fruit token eight times out of nine, and the 1-fruit token only one time in nine.

This would, I think, require that there not be any loops in the grammar production (or else that the system halted its self-analysis if it discovered any). And, of course, perfect balance of production is not necessarily what we’re trying for anyway.

I’m still thinking about some of the visualization angles on this one.

Interesting post, and it’s good to know you are continuing to work on procedurally generated prose (and limericks!). I wanted to say that I actually greatly enjoyed your Annals of the Parrigues, and have been looking forward to more from you along those lines – I hadn’t heard about The Mary Jane of Tomorrow but will try it out quicker than you can procedurally generate 17 permutations of Diced Spam Pie.

I was wondering if you’d been following some of the recent games criticism discussing where procedural content has ultimately failed to be very interesting or engaging (in games such as Spore or No Man’s Sky), and might have some general thoughts about how procedurally generated text content can potentially be made to resonate more strongly than was the case for the largely graphical reskins in those games. Much of the discussion has focused on what Kate Compton has called the problem of “procedural oatmeal” – i.e. it is very easy to pour 10,000 bowls of plain oatmeal, with each oat being in a different position and each bowl essentially unique, but it is very hard to make these differences *matter* to an audience, and be perceived as truly different in any memorable or thought-provoking way.

http://motherboard.vice.com/read/no-mans-sky-review

It seems to me that well-crafted procedurally generated text (even non-interactive text like the Annals were) may have much greater potential to create something truly interesting for human audiences. For me at least, the Annals were a surprisingly engaging diversion; I read them straight through over Christmas and thoroughly enjoyed myself. I also enjoyed reading about their generation at the end – I think it helped that there were multiple hidden layers to the generation process (the tarot system giving a subtly idiosyncratic mood or tone to each province, overlaid with the evolving relationship between the narrators sometimes modifying how their surroundings were described), and this made differences between provinces much more nuanced and unexpected. Unlike procgen graphics, text can describe a large number of subtle differences (cultural, interpersonal, historical) that are important to people, and throw them together in unexpected ways that can sometimes have thought-provoking results. As a result, I found that this quaint little travelogue held my interest even when its procedurally generated nature was often very salient, while I expect visiting as many different gorgeously colored planets and animals in No Man’s Sky could start to pale rapidly (though I haven’t played that yet, as a disclaimer).

Anyway, consider this a call for further exploration of procedurally-generated literature of any kind (be it IF, limericks, or noninteractive prose). Some potentially interesting directions could be:

1) a noninteractive work like the Annals, where the reader can adjust certain seed parameters and regenerate the work, either to achieve a goal or just explore the possible states for their own sake

2) true procedurally generated IF: perhaps an attempt at open-world exploration along the lines of No Man’s Sky, with the aim of finding out whether this can be made interesting enough that people actually want to explore it

Thanks for that extremely interesting post!

Speaking only for myself, obviously, one of the hitches with using procgen text to describe those subtle differences is that it’s hard to get the text to run together without throwing the procedural generation in the reader’s face, and that tends to override the subtlety. If you have four procedurally generated graphic models and you put them together it looks like something, without much effort required on your part. If you have some procedurally generated sentences and you put them together it reads like (one of mine):

“To the west you can see a disturbance in the air.

To the northwest you can see something.

To the northwest you can see something brown.”

which is clunkily mechanical. And it takes a lot of effort to make it not mechanical. Annals of the Parrigues is truly exceptional among the procedurally generated prose I’ve read (not that there aren’t great things–I Waded In Clear Water is amazing, but it’s not meant to read naturally). Even making English articles behave properly can be non-trivial.

Toward the end of the article, you mentioned the option of selecting uniformly across the space of all possibilities. For one simple project I made (currently located at https://rocketnia.github.io/monday-comics/), I did that. I had it track how many possibilities in total could be produced by any given production rule, so whenever it was expanding a rule, it could look up how many possibilities were along each branch and weight its immediate choice based on that. I was already determined not to have any loops, so I didn’t mind that limitation.

I wasn’t sure I liked the resulting distribution at first. It gives preference to root structures that have a lot of moving parts, making almost every result a high-complexity result. Yet, despite the overwhelming complexity, the root structure itself gets samey fast. I addressed this by simplifying some complex options that were hogging the spotlight and adding a little more complexity to options I liked that weren’t being selected.

So the opinion I came to in that project was that I wanted the immediate expansions of a rule to be almost balanced with each other in terms of the number of full expansions they each lead to. In other words, for most of my written content, I’d like (selecting uniformly at random from all possibilities) and (selecting uniformly at random from the immediate expansions of each production rule along the way) to be roughly equivalent distributions.

If I have a good reason to diverge from this, I figure I should probably spend time tuning and testing to be able to substantiate that good reason. The ongoing progress of that effort can be accounted for in the form of an explicit weight annotation; as I devote my attention to that effort, I make tweaks to the weight annotation, and the annotation itself calls my attention to the fact that there’s something to maintain here. So, it could be interesting to pursue a tool where if two expansions of a production rule lack weight annotations *and* have much differently sized possibility spaces, they’re automatically highlighted or warned about.

How much is “much differently sized” seems like a matter of degree, so colors and sizes like you’re using, or like Ice-Bound has used (http://ice-bound.com/news/visualizing-the-combinatorial/), is a good idea. In particular, where you’re coloring parts of a generated text to indicate whether they vary too much or too little, maybe the source code could be colored by the same scheme, highlighting the expansions of a production rule relative to each other.

…And this is leading me to some other rather specific design thoughts: Instead of weighting an expansion with an absolute percentage chance, maybe it would make more sense for the annotation to be a function of other expansions’ weights, sort of to say “Yes, I do expect this to be picked half as much as the others,” or to say “Yes, I do expect this to be picked as often as hypothetical options X and Y put together.” That way, even an annotated expansion can be highlighted according to whether the expected imbalance is up-to-date relative to the actual imbalance.

As always, thanks for sharing the considerations you’re trying to address and the techniques you’re using to address them. I hoped this anecdote of mine could give back a bit, but I didn’t know I’d come out of this comment with a better idea of what kind of weighting system to try. :)

“I wasn’t sure I liked the resulting distribution at first. It gives preference to root structures that have a lot of moving parts, making almost every result a high-complexity result. Yet, despite the overwhelming complexity, the root structure itself gets samey fast. I addressed this by simplifying some complex options that were hogging the spotlight and adding a little more complexity to options I liked that weren’t being selected.”

This squares with my experience, such as it is. Here’s some output from Garbage Collection, which is a small generator done in a hurry (with the “do you want to continue” prompts stripped):

IMO things like “A jagged piece of glass. A smashed plastic panel. A fleshy blob. A jagged piece of plastic” feel less samey than “A sharp piece of glass is stuck to a cracked metal panel. A white liquid spreads across a plastic panel. A pink slick stains a frayed rope fragment. A fleshy chunk is smeared on a fragment of wood. Out of a fragment of rope wells a green secretion. Into a mass of meat sinks a maroon ooze.” The shorter ones are in a way more repetitive, but repeated short bits are more natural-sounding than repeated medium-length bits with the same rhythm. And the second excerpt doesn’t even come from the same root structure (there’s two each of solid thing attached to solid thing, liquid described before solid thing, and solid thing described before liquid)–which is I guess part of the problem, because I have several different root structures that produce sentences with similar lengths and rhythms.

A bit of writing advice that I read somewhere is to vary the lengths of your sentences. That seems particularly important for procgen text–maybe it’s important to bias the generator toward sentences that don’t have the same complexity/rhythm/length as the last one you generated, and also to try to be sparing with the highest-complexity ones (the ones Stella Gibbons would mark with three asterisks).

This is awesome. Thank you for posting it!

Regarding expanding a context-free grammar “uniformly” even with loops:

Essentially, the problem is that a context-free grammar with loops usually has an infinite number of possible parse trees. There is no uniform probability distribution over a countably infinite set. By choosing a bijection between the parse trees and the natural numbers, you can offer the user the choice of distribution over the natural numbers – possibly a nice smooth exponential function, or perhaps they would like a uniform distribution over the first ten million numbers, or some combination – uniform up to a soft limit then tailing off with an exponential.

Then you can generate a random parse tree from the grammar by choosing a random number from the distribution, and putting it through the bijection to find the corresponding parse tree.

So the only slightly tricky part is writing code that takes an arbitrary context free grammar, and outputs a bijective function from the natural numbers to the parse trees of that grammar. That’s what I’ve done – the code’s very clumsy but it does work, and you can contact me at my-first-name@the-same-name.com if you want to look at it.

The usual way (that, if I understand correctly, Tracery uses) of generating a parse tree from a context free grammar is unfortunately prone to explosions if the grammar includes a rule like “foo: [“bar”, “#foo# #foo# #foo#”, “#foo# #foo# #foo# #foo#”]. The problem is that on average, expanding a single #foo# in a partially-expanded parse tree will increase the number of #foo# to be expanded, not decrease it, which means that there is (in principle) a nonzero probability that the expansion process will go on forever.

In practice what happens is that something bottoms out – the call stack, or some size limit – and either the system finishes off the tree in a hurry somehow or throws the partially-expanded parse tree away entirely and tries again.