Over the years since I first ran it, I’ve made a few iterations of my narrative (large-)party game San Tilapian Studies. It’s also been run by other people with their own versions of the stickers and text, and it was even made available to the public at the Wellcome Play Spectacular.

In 2015, I offered a version of it as an IF Comp prize, and the winner requested that I adapt it to a particular fantasy world idea he had in mind. That version was mechanically pretty similar to the original — partygoers are given stickers with nouns or descriptive phrases on them, and must find other participants that are a good match for them in order to make a three-segment-long description of an object. Then they make up some backstory about that object and write it in the book.

The main tweaks I made were:

- the game package also included a fantasy outline map that players could use to locate places in their game world

- the sticker collection included a few wild-card words, words that could be played as either a noun or an adjective (like “bone” or “mirror”). The intent there was to help keep things moving smoothly if people felt they were running out of plausible matches

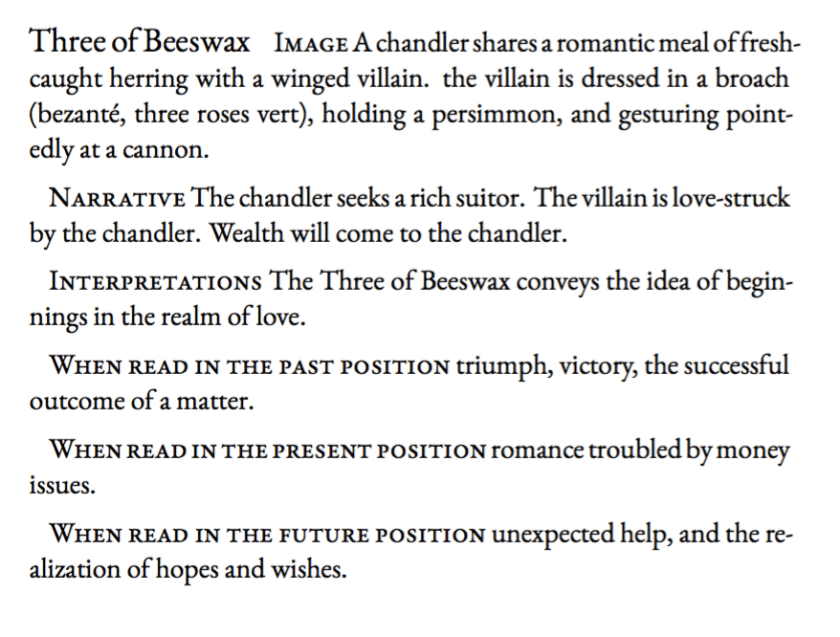
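In corpus terms, a wild-card is a sticker that can fill more than one slot, and each such sticker adds many new legal combinations. The Python below is a minimal sketch of that effect, not a description of the actual kit. It assumes, hypothetically, that a description is one noun sticker plus two modifier stickers. Apart from “bone” and “mirror,” the sticker texts are invented, as are the names `STICKERS`, `valid_descriptions`, and `without_wildcards`.

```python
from itertools import combinations

# Hypothetical sticker corpus: each sticker maps to the roles it can fill.
# "bone" and "mirror" are the wild-cards (noun or modifier); the rest are
# invented single-role examples.
STICKERS = {
    "bone": {"noun", "modifier"},
    "mirror": {"noun", "modifier"},
    "lantern": {"noun"},
    "cracked": {"modifier"},
    "of the drowned king": {"modifier"},
}

def valid_descriptions(stickers):
    """List every (modifier, modifier, noun) arrangement that a group of
    three stickers can form, under the assumed one-noun-two-modifiers rule."""
    results = []
    for trio in combinations(sorted(stickers), 3):
        for noun in trio:
            if "noun" not in stickers[noun]:
                continue
            mods = [w for w in trio if w != noun]
            if all("modifier" in stickers[m] for m in mods):
                results.append((mods[0], mods[1], noun))
    return results

def without_wildcards(stickers):
    """Copy of the corpus where every multi-role sticker is noun-only."""
    return {w: ({"noun"} if len(r) > 1 else r) for w, r in stickers.items()}

print(len(valid_descriptions(STICKERS)))                     # 12
print(len(valid_descriptions(without_wildcards(STICKERS))))  # 3
```

In this toy corpus, letting two words swing between roles raises the number of legal descriptions from 3 to 12. That is the kind of slack that helps when players feel they are running out of plausible matches.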

This year I offered the San Tilapian Studies kit again. One of the winners requested the original version, which is cool — it’s fairly easy for me to do that, and I think the Ruritanian romance setting provides a lot of room to imagine different miniature plot lines: love stories, politics, military affairs, interpersonal intrigue, etc., all fit into that world.

The other winner asked for a Lovecraftian re-skin of the set, and that was a bigger revision.

The rest of this post is about the design work I did to adapt San Tilapian Studies, first for a minor upgrade and second for a larger re-skin. While the result isn’t played on a computer, this is an exercise in developing a corpus for procedural use.

Designing, testing, and editing a corpus is in fact a significant part of the work in any procedural text project. So while this differs from, say, Annals of the Parrigues in that it doesn’t produce a single finished text, many of the same issues come up in designing the corpus.